Growth of AI could boost cybercrime and security threats, report warns

Experts say action must be taken to control artificial intelligence tech

A ‘Mikado’ German forces drone in Munster. It is feared ‘drone swarms’ fitted with small explosives and self-driving technology will be used to carry out untraceable assassinations in the future. Photograph: Patrik Stollarz/AFP/Getty Images

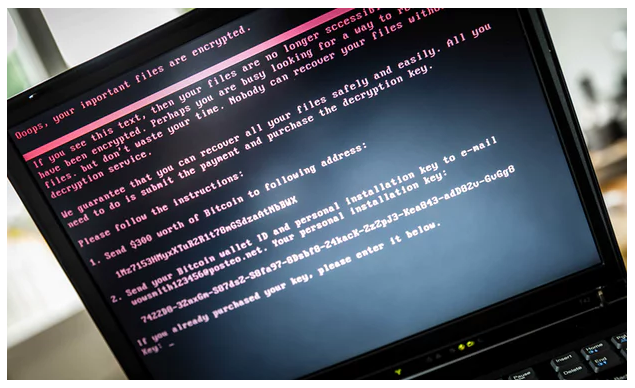

Wanton proliferation of artificial intelligence technologies could enable new forms of cybercrime, political disruption and even physical attacks within five years, a group of 26 experts from around the world have warned.

In a new report, the experts, drawn from academia, industry and the charitable sector, describe AI as a “dual use technology” with potential military and civilian uses, akin to nuclear power, explosives and hacking tools.

“As AI capabilities become more powerful and widespread, we expect the growing use of AI systems to lead to the expansion of existing threats, the introduction of new threats and a change to the typical character of threats,” the report says.

The authors argue that researchers need to consider potential misuse of AI far earlier in the course of their studies than they do at present, and work to create appropriate regulatory frameworks to prevent malicious uses of AI.

If the advice is not followed, the report warns, AI is likely to revolutionise the power of bad actors to threaten everyday life. In the digital sphere, they say, AI could be used to lower the barrier to entry for carrying out damaging hacking attacks. The technology could automate the discovery of critical software bugs or rapidly select potential victims for financial crime. It could even be used to abuse Facebook-style algorithmic profiling to create “social engineering” attacks designed to maximise the likelihood that a user will click on a malicious link or download an infected attachment.

The increasing influence of AI on the physical world means that world is also vulnerable to AI misuse. The most widely discussed example involves weaponising “drone swarms”, fitting them with small explosives and self-driving technology and then setting them loose to carry out untraceable assassinations as so-called “slaughterbots”.

‘AI is going to be extremely beneficial, and already is, to the field of cybersecurity,’ says Dmitri Alperovitch, the co-founder of information security firm CrowdStrike. Photograph: Rob Engelaar/EPA

Political disruption is just as plausible, the report argues. Nation states may decide to use automated surveillance platforms to suppress dissent – as is already the case in China, particularly for the Uighur people in the nation’s northwest. Others may create “automated, hyper-personalised disinformation campaigns”, targeting every individual voter with a distinct set of lies designed to influence their behaviour. Or AI could simply run “denial-of-information attacks”, generating so many convincing fake news stories that legitimate information becomes almost impossible to discern from the noise.

Seán Ó hÉigeartaigh of the University of Cambridge’s centre for the study of existential risk, one of the report’s authors, said: “We live in a world that could become fraught with day-to-day hazards from the misuse of AI and we need to take ownership of the problems – because the risks are real. There are choices that we need to make now, and our report is a call-to-action for governments, institutions and individuals across the globe.

“For many decades hype outstripped fact in terms of AI and machine learning. No longer. This report … suggests broad approaches that might help: for example, how to design software and hardware to make it less hackable – and what type of laws and international regulations might work in tandem with this.”

Not everyone is convinced that AI poses such a risk, however. Dmitri Alperovitch, the co-founder of information security firm CrowdStrike, said: “I am not of the view that the sky is going to come down and the earth open up.

“There are going to be improvements on both sides; this is an ongoing arms race. AI is going to be extremely beneficial, and already is, to the field of cybersecurity. It’s also going to be beneficial to criminals. It remains to be seen which side is going to benefit from it more.

“My prediction is it’s going to be more beneficial to the defensive side, because where AI shines is in massive data collection, which applies more to the defence than offence.”

The report concedes that AI is the best defence against AI, but argues that “AI-based defence is not a panacea, especially when we look beyond the digital domain”.

“More work should also be done in understanding the right balance of openness in AI, developing improved technical measures for formally verifying the robustness of systems, and ensuring that policy frameworks developed in a less AI-infused world adapt to the new world we are creating,” the authors wrote.